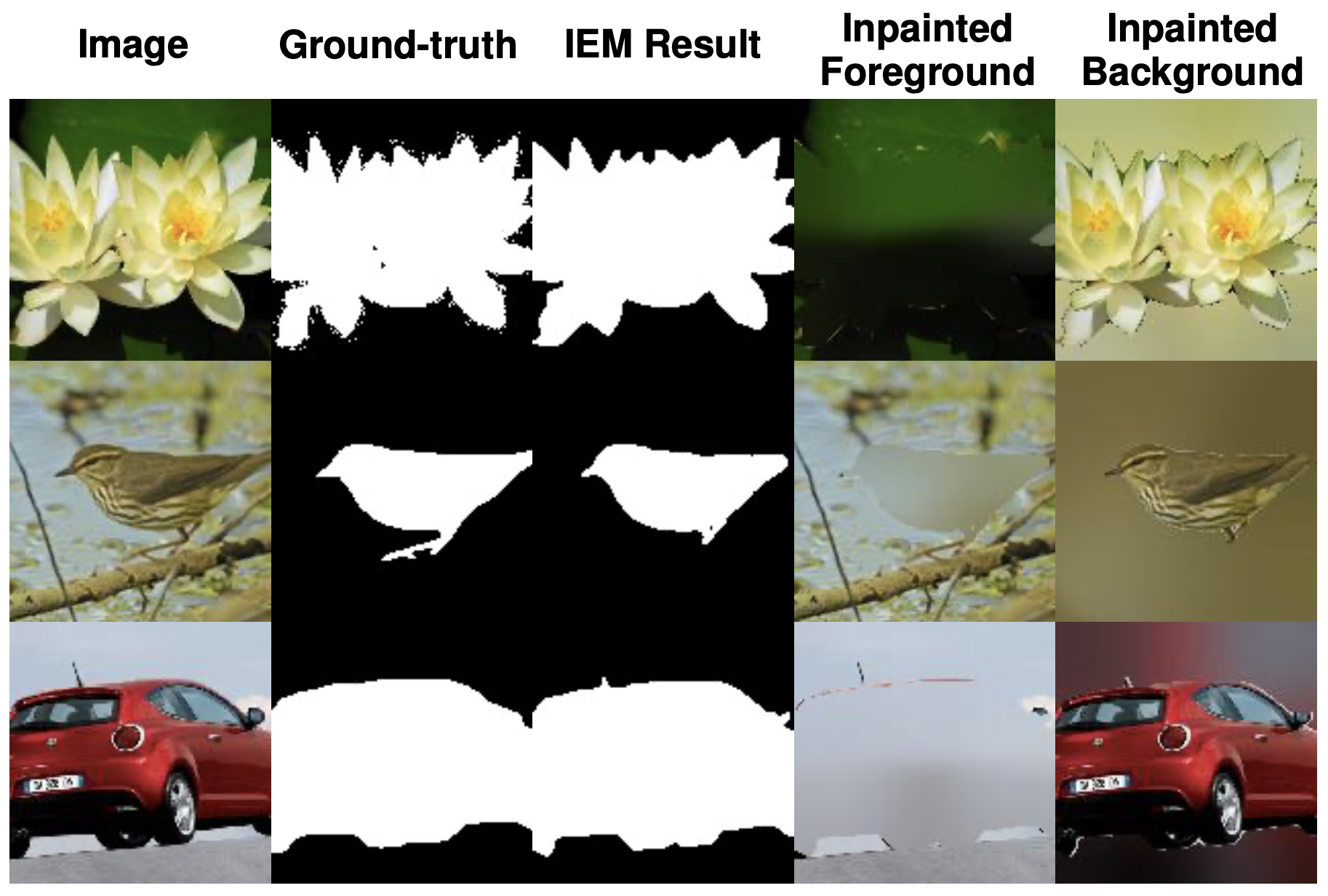

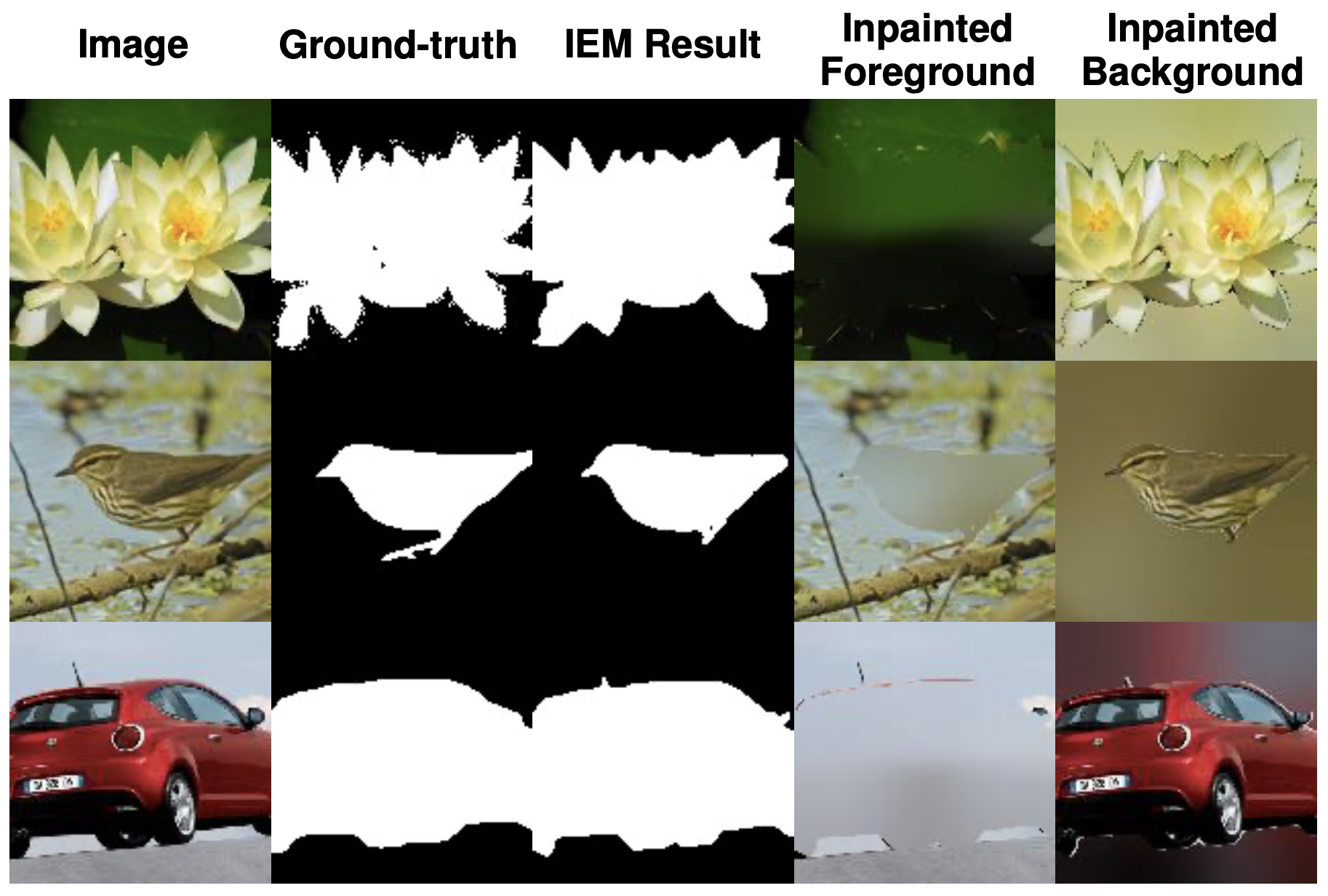

Illustration of our Inpainting Error Maximization (IEM) framework for completely unsupervised segmentation, applied to flowers, birds, and cars. Segmentation masks maximize the error of inpainting foreground given background and vice versa.

Information-theoretic segmentation

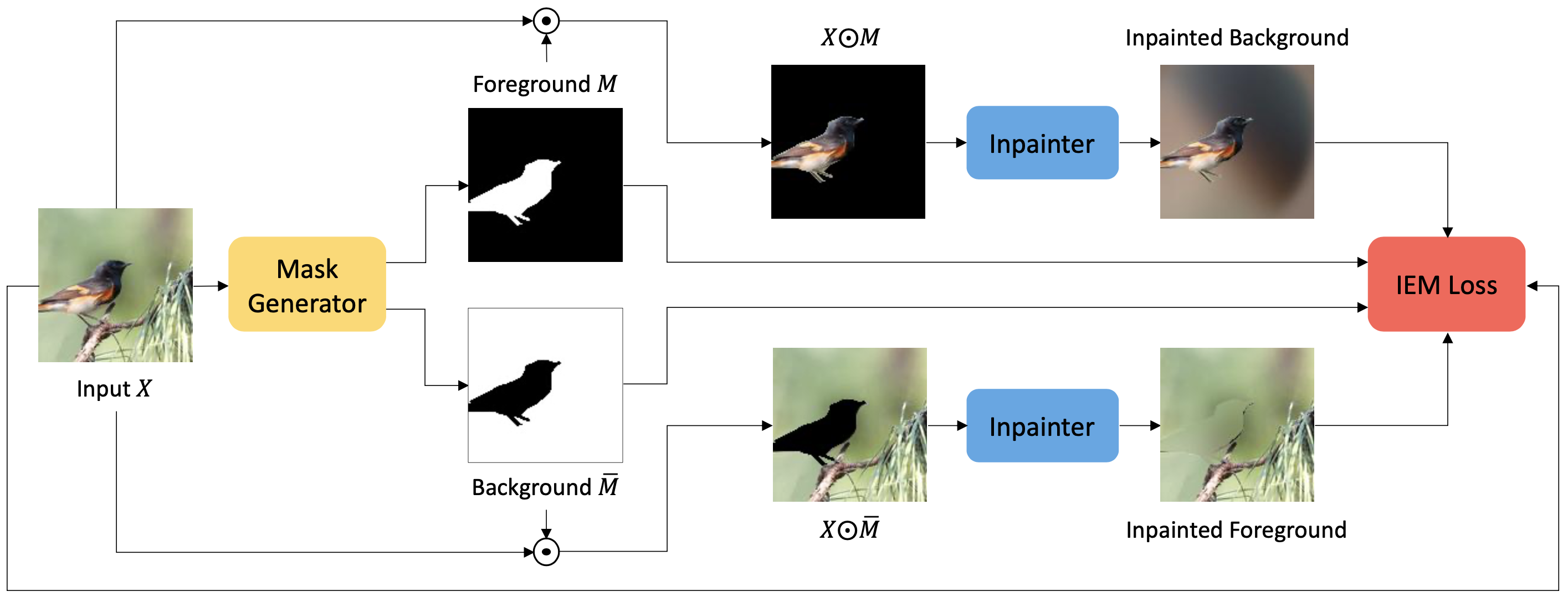

Our Inpainting Error Maximization (IEM) framework is motivated by the intuition that a segmentation into objects minimizes the mutual information between the pixels in the segments and hence makes inpainting of one segment given the others difficult. This gives a natural adversarial objective where a segmenter tries to maximize, while an inpainter tries to minimize, inpainting error. However, rather than adopt an adversarial training objective, we found it more effective to fix a basic inpainter and directly maximize inpainting error through a form of gradient descent on the segmentation. IEM is learning-free and can be applied directly to any image in any domain.
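The adversarial objective described in this paragraph can be written down compactly. The following is only a sketch under assumed notation: the inpainter symbol $\phi$, the element-wise product $\odot$, the complement $\bar{M} = 1 - M$ for the background mask, and the unweighted $\ell_1$ error are illustrative choices, not definitions taken from this page.

$$
\max_{M}\;\min_{\phi}\;
\big\| M \odot \big(X - \phi(\bar{M} \odot X)\big) \big\|_1
+ \big\| \bar{M} \odot \big(X - \phi(M \odot X)\big) \big\|_1,
\qquad \bar{M} = 1 - M .
$$

Fixing $\phi$ to a basic, untrained inpainter $\phi_0$ removes the inner minimization, so only the maximization over the mask $M$ remains and can be carried out directly on the segmentation.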

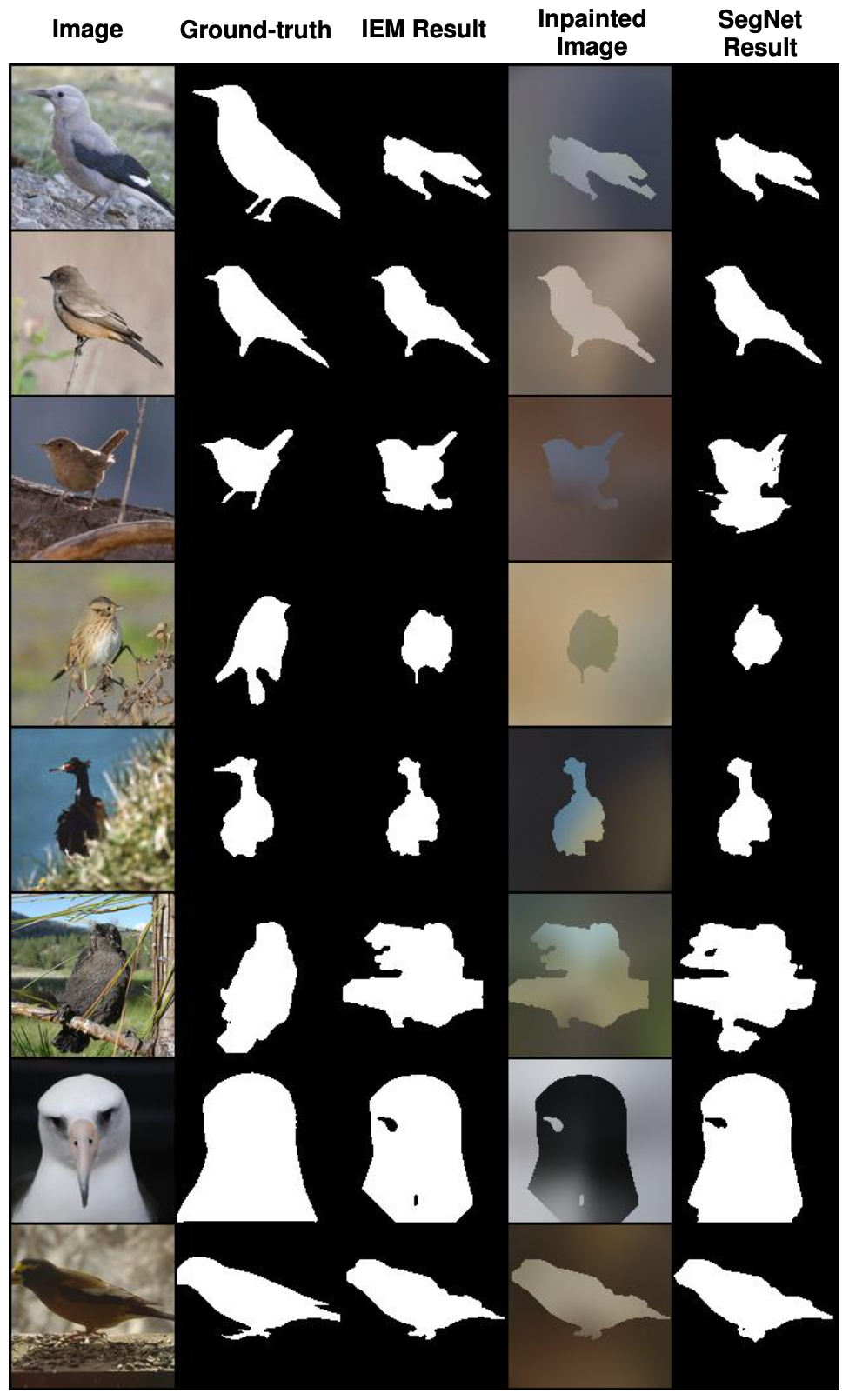

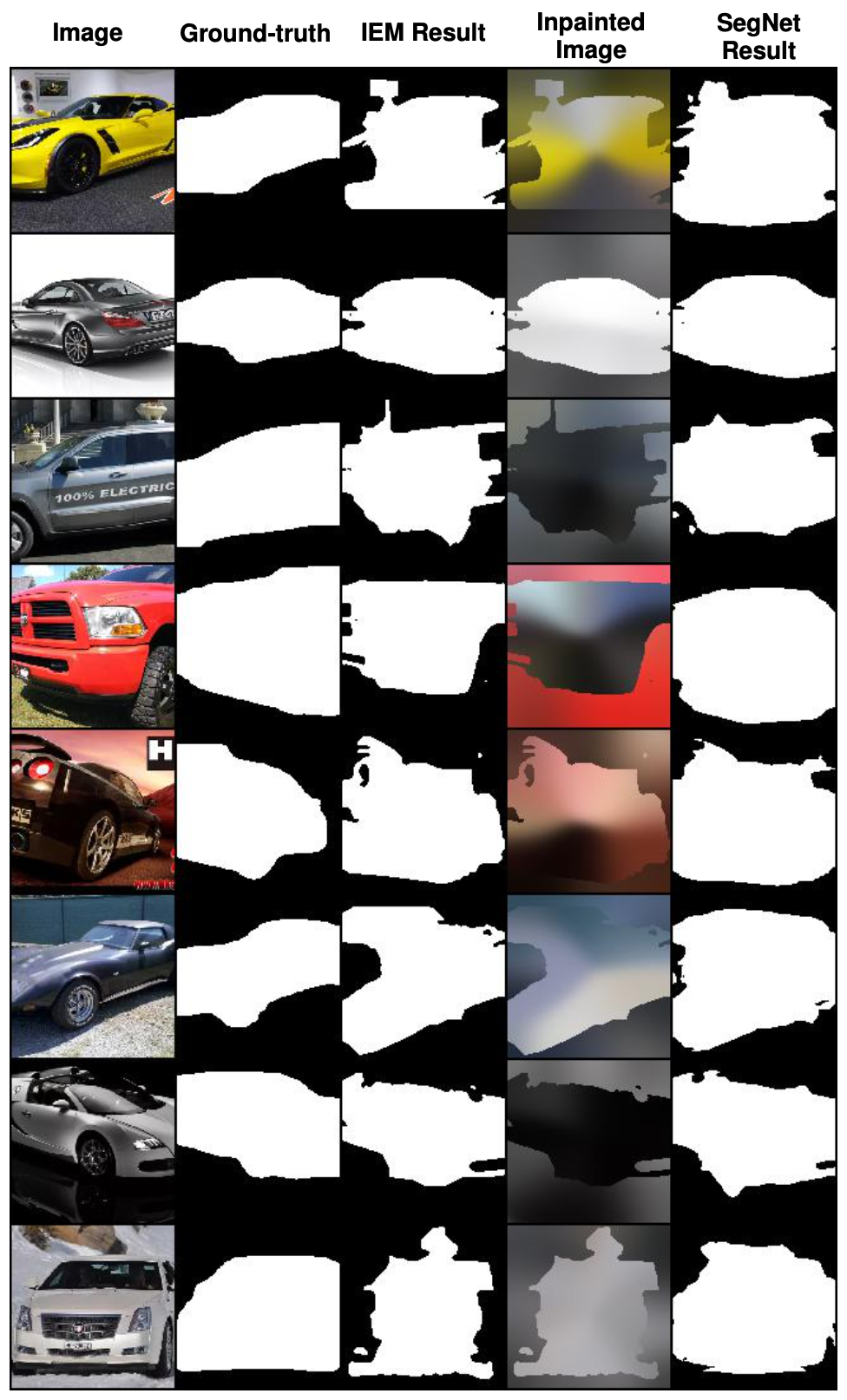

Given an unlabeled image X, a mask generator module first produces segmentation masks (e.g., foreground M and background M̄). Each mask selects a subset of pixels from the original image by performing an element-wise product between the mask and the image, hence partitioning the image into regions. Inpainting modules try to reconstruct each region given all others in the partition, and the IEM loss is defined by a weighted sum of inpainting errors.
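The pipeline above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation behind the results on this page: the local-mean inpainter, the per-region normalization, the window radius `r`, and the names `box_sum`, `inpaint`, `iem_loss`, `w_fg`, and `w_bg` are all assumptions made for the example.

```python
import numpy as np

def box_sum(a, r):
    """Sum each pixel's (2r+1) x (2r+1) neighbourhood, zero-padded at the border."""
    h, w = a.shape[:2]
    padded = np.pad(a, [(r, r), (r, r)] + [(0, 0)] * (a.ndim - 2))
    out = np.zeros(a.shape, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def inpaint(image, visible, r=3, eps=1e-8):
    """Assumed basic inpainter: local mean of the visible pixels only."""
    num = box_sum(image * visible[..., None], r)  # mask applied element-wise
    den = box_sum(visible, r)[..., None]
    return num / (den + eps)

def iem_loss(image, mask, w_fg=1.0, w_bg=1.0, r=3, eps=1e-8):
    """Weighted sum of foreground and background inpainting errors."""
    fg, bg = mask, 1.0 - mask
    fg_hat = inpaint(image, bg, r)  # foreground predicted from background
    bg_hat = inpaint(image, fg, r)  # background predicted from foreground
    # Assumption: each error is an L1 distance normalized by region size.
    err_fg = np.abs(fg[..., None] * (image - fg_hat)).sum() / (fg.sum() + eps)
    err_bg = np.abs(bg[..., None] * (image - bg_hat)).sum() / (bg.sum() + eps)
    return w_fg * err_fg + w_bg * err_bg

# Toy image: a bright 8x8 square on a dark 16x16 background, 3 channels.
image = np.zeros((16, 16, 3))
image[4:12, 4:12] = 1.0

object_mask = np.zeros((16, 16))
object_mask[4:12, 4:12] = 1.0   # aligned with the square
half_mask = np.zeros((16, 16))
half_mask[:, :8] = 1.0          # arbitrary left/right split

print("object-aligned mask:", iem_loss(image, object_mask))
print("left/right split:   ", iem_loss(image, half_mask))
```

On this toy image the object-aligned mask scores a higher loss than the arbitrary split, because neither region can be predicted from the other when the mask follows the object boundary.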

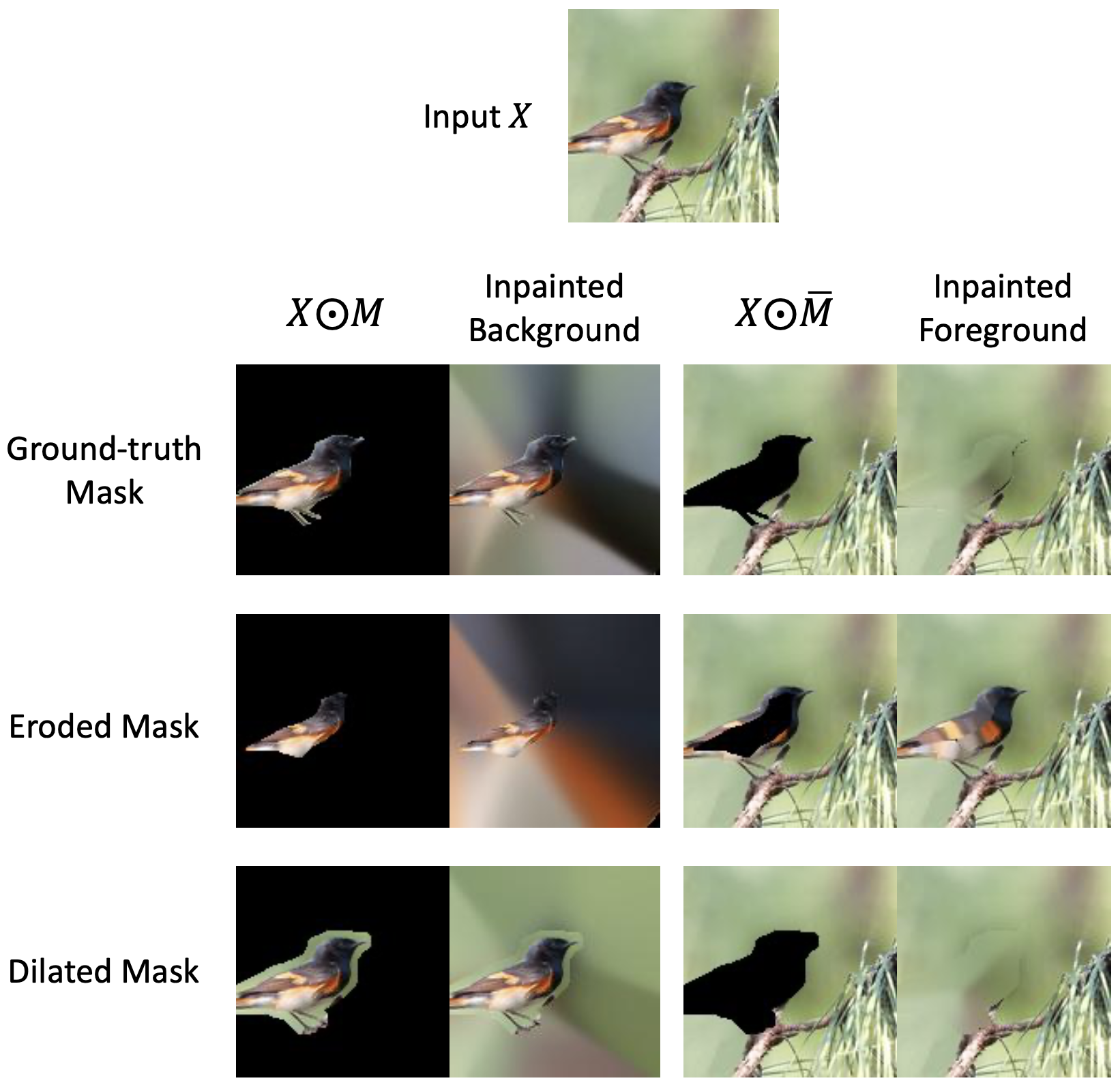

Foreground and background inpainting results using ground-truth, eroded (smaller), and dilated (bigger) masks. We see that the ground-truth mask incurs high inpainting error for both the foreground and the background, while the eroded mask allows reasonable inpainting of the foreground and the dilated mask allows reasonable inpainting of the background. Hence we expect IEM, which maximizes the inpainting error of each partition given the others, to yield a segmentation mask close to the ground-truth.
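The same comparison can be reproduced on a toy image. The sketch below assumes a grayscale image, a local-mean inpainter, and square structuring elements for erosion and dilation; none of these choices come from this page, and the helper names (`region_errors`, `erode`, `dilate`) are hypothetical.

```python
import numpy as np

def box_sum(a, r):
    """Sum each pixel's (2r+1) x (2r+1) neighbourhood, zero-padded at the border."""
    h, w = a.shape
    padded = np.pad(a, r)
    out = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def region_errors(image, mask, r=3, eps=1e-8):
    """Return (foreground error, background error) for a grayscale image."""
    def predict(visible):
        # Assumed inpainter: local mean over visible pixels only.
        return box_sum(image * visible, r) / (box_sum(visible, r) + eps)
    fg, bg = mask, 1.0 - mask
    err_fg = (fg * np.abs(image - predict(bg))).sum() / (fg.sum() + eps)
    err_bg = (bg * np.abs(image - predict(fg))).sum() / (bg.sum() + eps)
    return err_fg, err_bg

def dilate(mask, r):
    """Grow the mask: keep pixels with at least one mask pixel in their window."""
    return (box_sum(mask, r) > 0.5).astype(float)

def erode(mask, r):
    """Shrink the mask: keep pixels whose whole window lies inside the mask."""
    return (box_sum(mask, r) > (2 * r + 1) ** 2 - 0.5).astype(float)

# Toy image: a bright 8x8 square on a dark background; the square is the object.
image = np.zeros((16, 16))
image[4:12, 4:12] = 1.0
truth = image.copy()

masks = {"ground truth": truth, "eroded": erode(truth, 2), "dilated": dilate(truth, 2)}
errors = {name: region_errors(image, m) for name, m in masks.items()}
for name, (e_fg, e_bg) in errors.items():
    print(f"{name:>12}: foreground {e_fg:.3f}, background {e_bg:.3f}")
```

In this toy setting the eroded mask lowers the foreground error and the dilated mask lowers the background error relative to the ground-truth mask, matching the qualitative behaviour described in the caption.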

Results

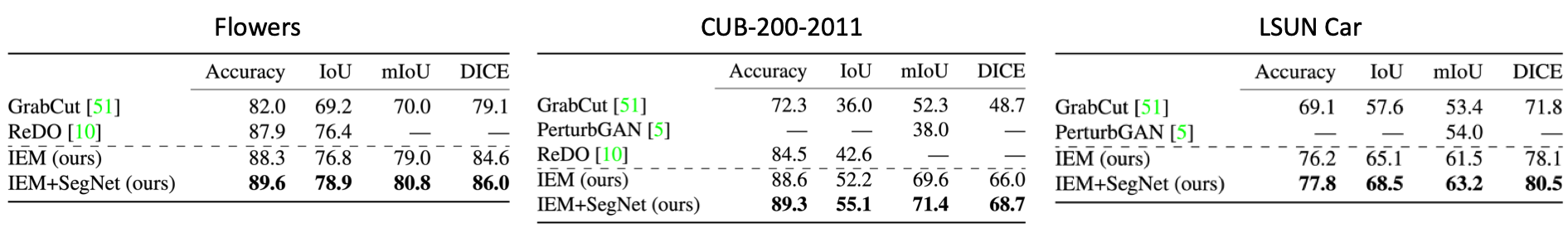

Unsupervised segmentation results on Flowers, CUB-200-2011, and LSUN Car. Segmentation masks used for evaluation are publicly available ground-truth (Flowers, CUB-200-2011) or automatically generated with Mask R-CNN (LSUN Car).

Related Work

Time-Supervised Primary Object Segmentation.

Yanchao Yang, Brian Lai, and Stefano Soatto. arXiv 2020.

Comment: This work segments objects in video by minimizing the mutual information between motion field partitions, which they approximate with an adversarial inpainting network. We likewise focus on inpainting objectives, but in a manner not anchored to trained adversaries and not reliant on video dynamics.

Inpainting Networks Learn to Separate Cells in Microscopy Images.

Steffen Wolf, Fred Hamprecht, and Jan Funke. BMVC 2020.

Comment: This work segments cells in microscopy images by minimizing a measure of information gain between partitions. While philosophically aligned in terms of objective, our optimization strategy and algorithm differ from this work.